Markov Chain Monte Carlo

import pandas as pd

import numpy as np

from scipy import stats

import matplotlib.pyplot as plt

import seaborn as sns

import preliz as pz

from tqdm.auto import tqdm

plt.rcParams["figure.figsize"] = (15,3)

plt.style.use('ggplot')Exercise 1: Single-chain Metropolis algorithm from scratch¶

The Metropolis algorithm is a very good start into the world of Markov Chain Monte Carlo. Even though it is less effective than Hamiltonian MCMC or NUTS, its simplicity allows easy investigation of the principles of MCMC algorithms. In this exercise you will build the Metropolis algorithm from scratch and apply it to a problem with binomial likelihood. We’ll still use the Beta prior from last week, but pretend we don’t know the analytical solution yet (of course we’ll use it to verify whether our implementation works correctly so that later we can work with arbitrary priors).

Problem to solve: Given , , and

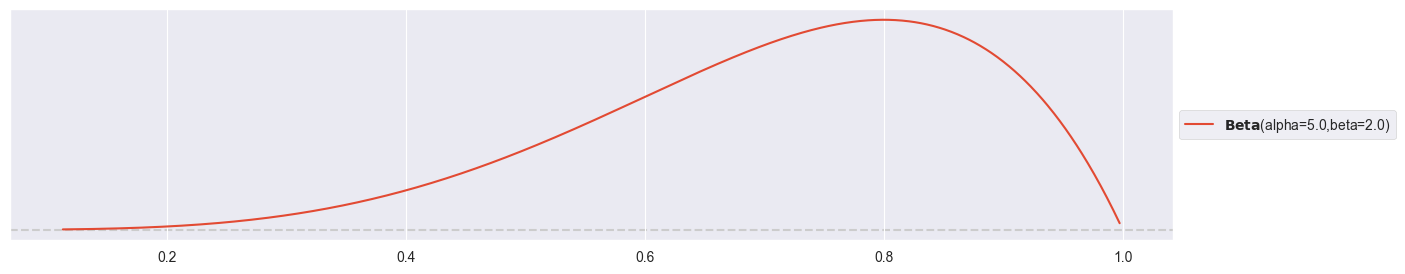

(prior)

(likelihood)

(posterior)

Problem definition:

alpha = 5

beta = 2

n = 10

k = 8a) Proposal distribution

You will use a Gaussian jump proposal distribution for this particular implementation. Implement a Python function propose_jump(pi, sigma), where pi is the current value in the chain and sigma is the standard deviation of the proposal distribution. Use scipy.stats.norm.rvs() to propose a new value that is returned by the function.

def propose_jump( pi, sigma ):

return stats.norm.rvs( loc=pi, scale=sigma )

propose_jump( 0.3, 0.1 )0.3145445223454777b) Random walk

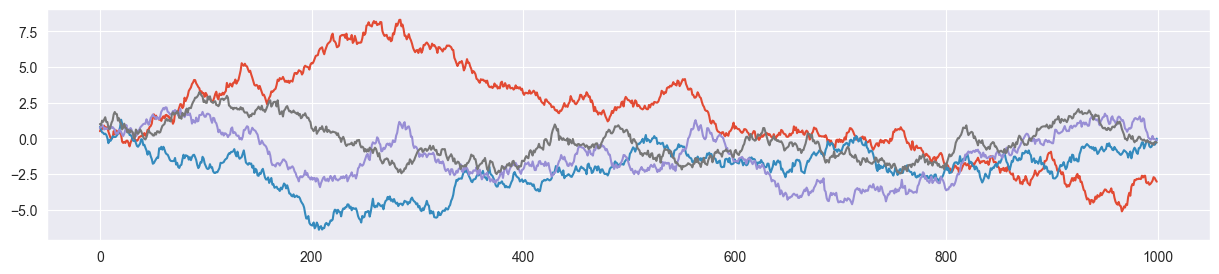

Create a simple (Markovian) Monte Carlo chain using your propose_jump() function. Initialize your chain with a random value for from the interval and accept all proposed jumps. Do this for 1000 iterations with a standard deviation of sigma = 0.2. Plot the values of the resulting chain (random walk). Run your code multiple times and look at the different random walks you produced.

Hint: Make sure you clip the proposed values to a range between 0 and 1. The proposal distribution is not aware of the fact that is bounded between 0 and 1.

def plot_random_walk( sigma=0.2 ):

pi = np.random.rand()

chain = []

for i in range(1000):

pi = propose_jump( pi, sigma=sigma )

chain.append( pi )

plt.plot( chain )

plot_random_walk( sigma=0.2 )

plot_random_walk( sigma=0.2 )

plot_random_walk( sigma=0.2 )

plot_random_walk( sigma=0.2 )

c) Jump decision

In the Metropolis algorithm (assuming a symmetric proposal function), the classic random walk is nudged towards our posterior by applying a decision rule for whether a proposed jump is accepted. This rule is the following:

Given are the current value in the chain and the proposed value . Jump to with the probability

Once you have computed (Hint: use stats.beta.pdf() and stats.binom.pmf()), you may sample a value (using np.random.rand()) and use the following decision rule:

If return the proposed value (accept)

If return the original value (reject)

Write a function decide(pi, pi2, alpha, beta, n, k), where pi is the current value in the chain, pi2 is the proposed value by propose_jump(pi, sigma), alpha and beta define the beta prior and n and k describe the measured binomially distributed data. Test your function by defining a fixed value for pi, proposing a jump pi2 with your propose_jump() function, run it through decide() and verify that it sometimes returns pi and sometimes the proposed value.

Hints:

You may print out or return to verify whether your code works correctly.

The jump proposal function might propose a value of outside of its domain . Make sure that in that case the proposal is rejected.

def decide( pi, pi2, alpha, beta, n, k, debug=False ):

# evaluate prior and likelihood

prior1 = stats.beta.pdf( pi, alpha, beta )

prior2 = stats.beta.pdf( pi2, alpha, beta )

likelihood1 = stats.binom.pmf( n=n, k=k, p=pi )

likelihood2 = stats.binom.pmf( n=n, k=k, p=pi2 )

# define alpha

alpha = min( 1, prior2/prior1 * likelihood2/likelihood1 )

if debug: print("pi = {:.5f}, pi2 = {:.5f}, alpha = {:.5f}".format(pi, pi2, alpha))

# jump decision

p = np.random.rand()

if p<=alpha and 0 < pi2 < 1:

return pi2

else:

return pi

pi = 0.2

pi2 = propose_jump( pi, sigma=0.2 )

decide( pi, pi2, alpha, beta, n, k , debug=True)pi = 0.20000, pi2 = 0.53515, alpha = 1.00000

0.5351546635619853d) Single-chain MCMC

Now it’s time to put everything together to have your own implementation of the Metropolis algorithm! Create an empty list called chain, randomly sample an initial value for between 0 and 1 and use a for loop to iteratively apply your propose_jump() and decide() functions n_steps times. Make sure you store the current value of pi in chain in each step.

Encapsulate your code in a function metropolis_chain(n_steps, alpha, beta, n, k, sigma) that returns the final chain as a list with n_steps entries. Generate a chain with 1000 steps (samples).

def metropolis_chain( n_steps, alpha, beta, n, k, sigma ):

# initialize

pi = np.random.rand()

chain = []

accepted = 0

# create chain

for i in tqdm( range( n_steps ) ):

pi2 = propose_jump( pi, sigma )

pi = decide( pi, pi2, alpha, beta, n, k )

chain.append( pi )

return chain

chain = metropolis_chain( 1000, alpha, beta, n, k, sigma=0.2 )

len( chain )1000e) Verification

You may now verify whether your metropolis_chain() function works correctly. To this end, perform the following steps:

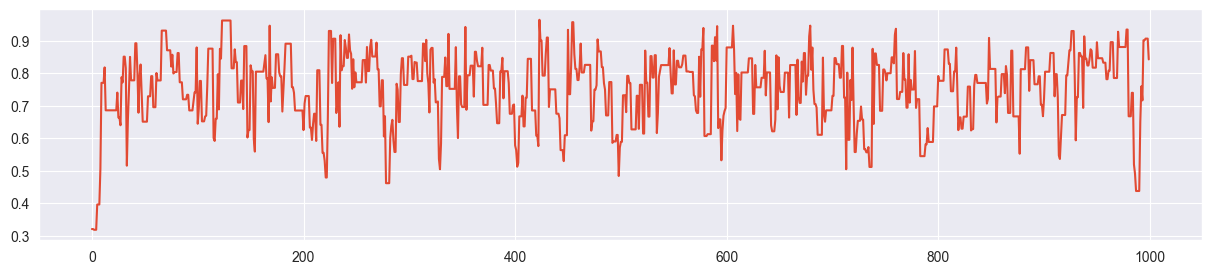

Hyperparameter tuning: Visualize your chain in a trace plot by simply plotting the evolution of your chain values. Does the trace plot look alright? If the chain is mixing slowly (acceptance rate too high), increase

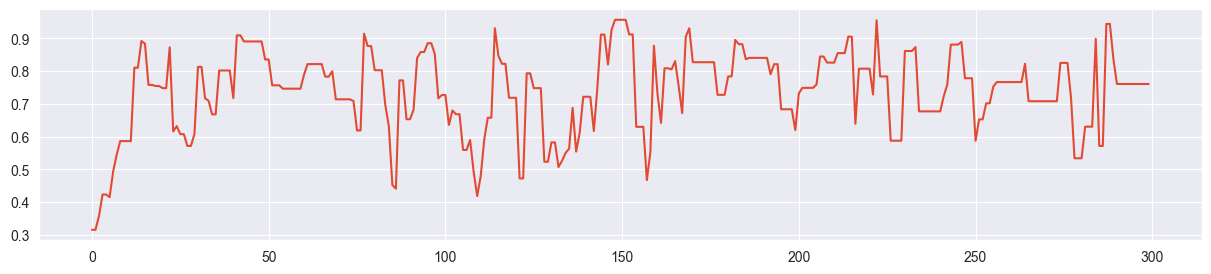

sigma, if the chain is often stuck at a value (acceptance rate too low), decreasesigma. Before you continue, make sure that your trace plot looks ok.Visualize distribution: Now visualize the distribution of your samples using a histogram. Thereby make sure, that your histogram shows a density instead of absolute counts. Overplot the density with the PMF of a distribution (as we computed it in closed form last week). Does the distribution of the samples more or less match the closed-form distribution?

Trace plot:

plt.plot( chain )

(looks ok - otherwise tune as done in the next part)

Step 1 (hyperparameter tuning): Try out other values of sigma

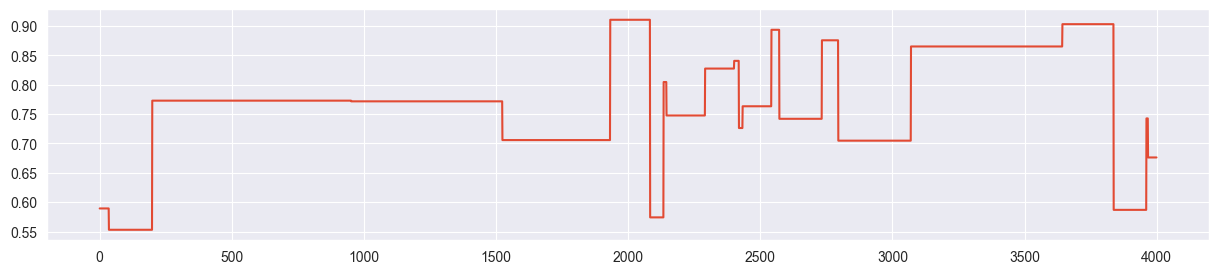

chain = metropolis_chain( 1000, alpha, beta, n, k, sigma=0.01 )

plt.plot( chain )

Example for slow mixing - sigma is too small and the chain explores the parameter space only slowly.

chain = metropolis_chain( 1000, alpha, beta, n, k, sigma=0.5 )

plt.plot( chain )

The chain is often stuck. High rejection rate because sigma is too large and most of the proposals land far away from the mass of the posterior.

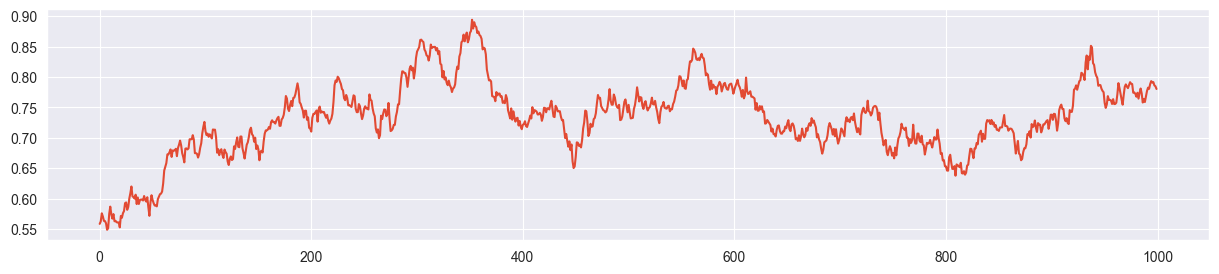

sigma = 0.2 seems more or less ok:

chain = metropolis_chain( 1000, alpha, beta, n, k, sigma=0.2 )

plt.plot( chain )

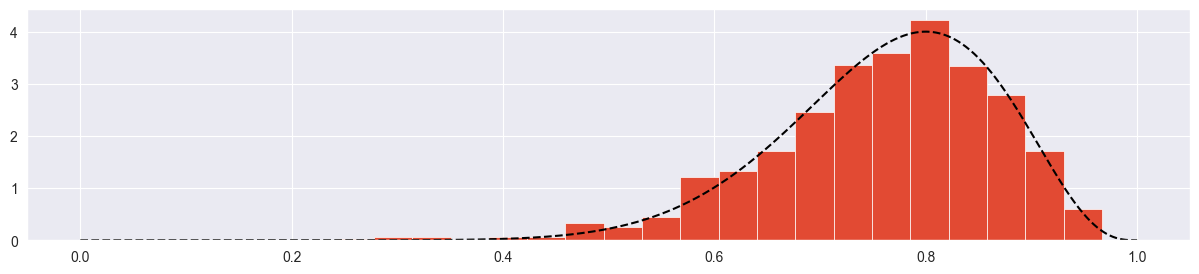

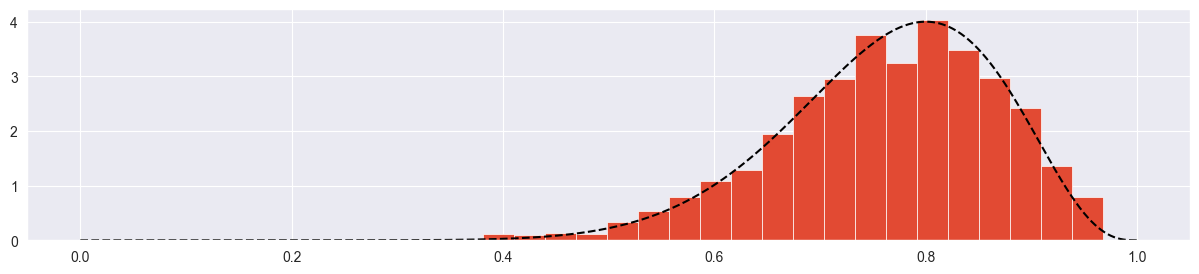

Step 2: Visualize distribution

# histogram

plt.hist( chain, density=True, bins=20 )

# true posterior

pi_range = np.linspace(0, 1, 1000)

true_posterior = [stats.beta.pdf(pi, alpha+k, beta+n-k) for pi in pi_range]

plt.plot( pi_range, true_posterior, c="black", ls="--" )

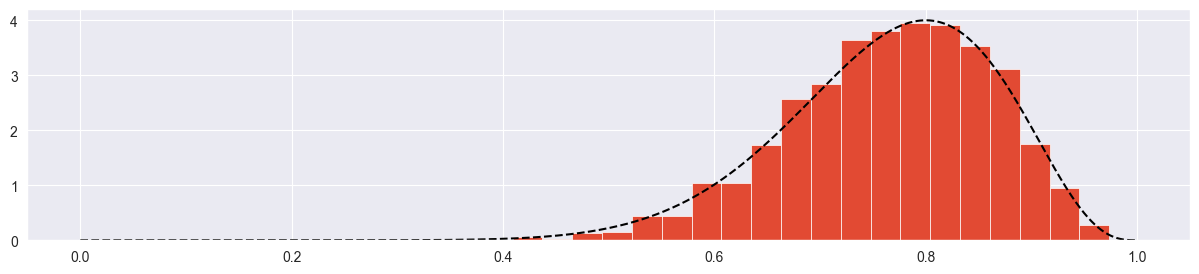

Seems more or less ok! Try with more samples:

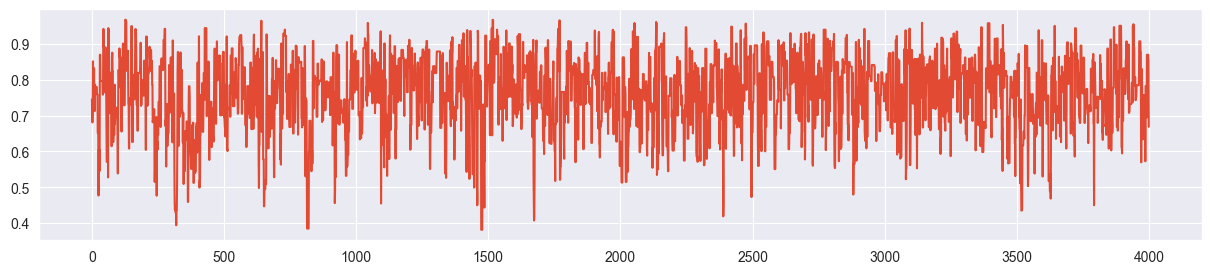

chain = metropolis_chain( 4000, alpha, beta, n, k, sigma=0.2 )

plt.plot( chain )

plt.hist( chain, density=True, bins=20 )

plt.plot( pi_range, true_posterior, c="black", ls="--" )

Looks good!

f) Playing around

If you have not done this yet: Play around with different values for the proposal jump distribution standard deviation sigma. What is the effect on the trace plot and the histogram (see previous part) if you set sigma too small, too high or just right? What tells you that you chose sigma just right? (apart from the true closed-form posterior distribution).

sigma much too low:

chain = metropolis_chain( 4000, alpha, beta, n, k, sigma=0.002 )

plt.plot( chain )

plt.hist( chain, density=True, bins=20 )

plt.plot( pi_range, true_posterior, c="black", ls="--" )

Chain explores only a small part of the parameter space! Acceptance rate is very high because chain always climbs up towards the mode with very small steps. However it barely reaches it.

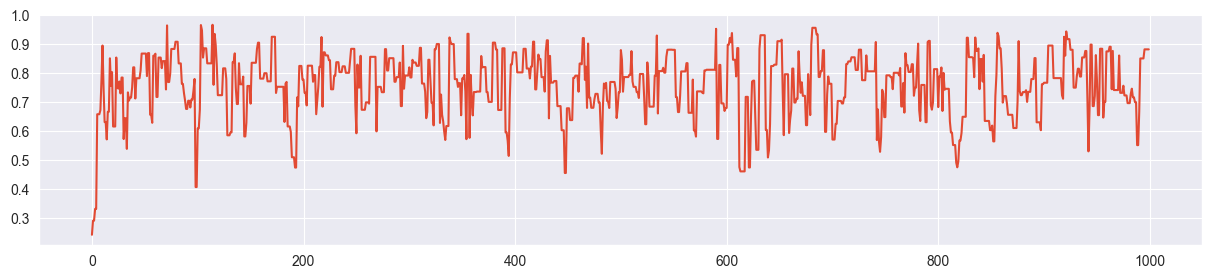

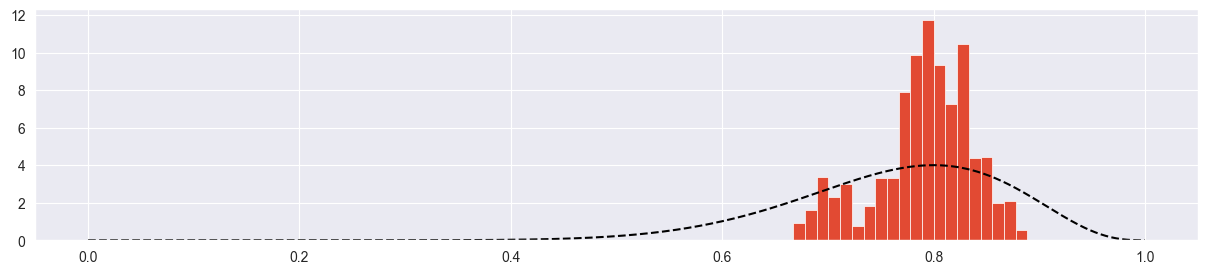

sigma much too high:

chain = metropolis_chain( 4000, alpha, beta, n, k, sigma=20 )

plt.plot( chain )

plt.hist( chain, density=True, bins=20 )

plt.plot( pi_range, true_posterior, c="black", ls="--" )

Chain is mostly stuck at the same point and most of the proposals are rejected. Because of this, we only get a few independent samples and the density is concentrated and exaggerated around the points where the chain was stuck.

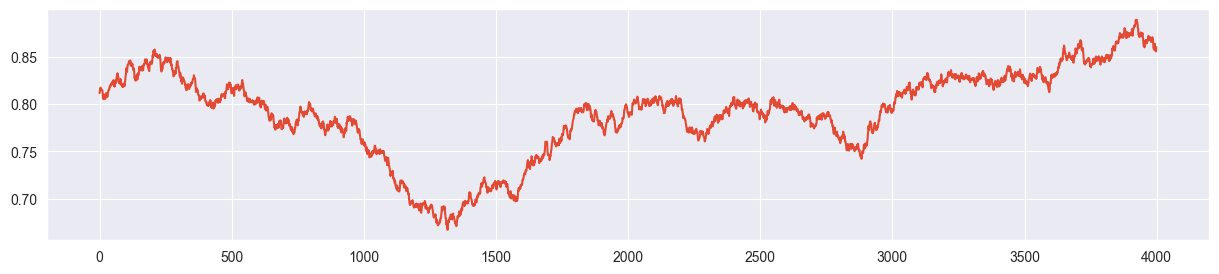

sigma just right:

chain = metropolis_chain( 4000, alpha, beta, n, k, sigma=0.2 )

plt.plot( chain )

plt.hist( chain, density=True, bins=20 )

plt.plot( pi_range, true_posterior, c="black", ls="--" )

The trace plot looks completely random (not stuck, not creeping), the true distribution is approximated quite nicely.

Exercise 2: Mult-chain Metropolis algorithm¶

In the previous exercise you used the trace plot as a diagnostic for whether your chain is healthy (fast mixing). Here we investigate another instrument: density plots of multiple chains.

a) Multiple chains

Write a function metropolis(n_chains, n_steps, alpha, beta, n, k, sigma) that runs n_chains different chains (let’s start with 4) using your metropolis_chain() function from the previous exercise. Your function should return a numpy array of dimension (n_steps, n_chains) that you can then use in the remaining parts of the exercise.

def metropolis( n_chains, n_steps, alpha, beta, n, k, sigma ):

res = [metropolis_chain( n_steps, alpha, beta, n, k, sigma ) for i in range( n_chains)]

return np.array(res).T # to have chains in columns

sim = metropolis( 4, 4000, alpha, beta, n, k, sigma=0.2 )

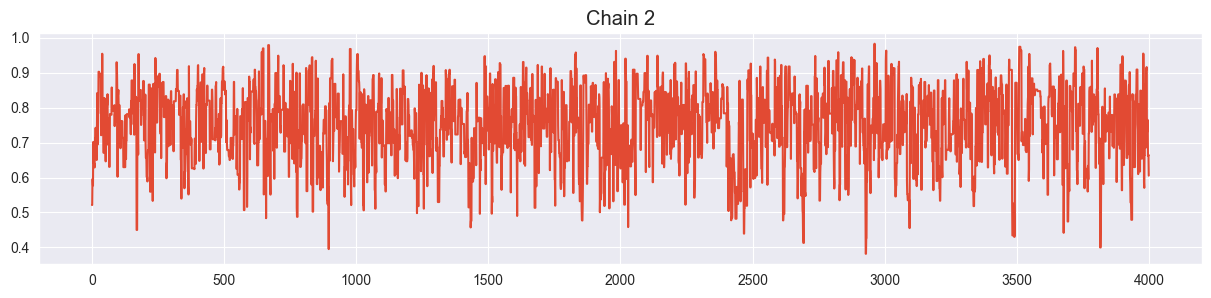

sim.shape(4000, 4)b) Trace plots

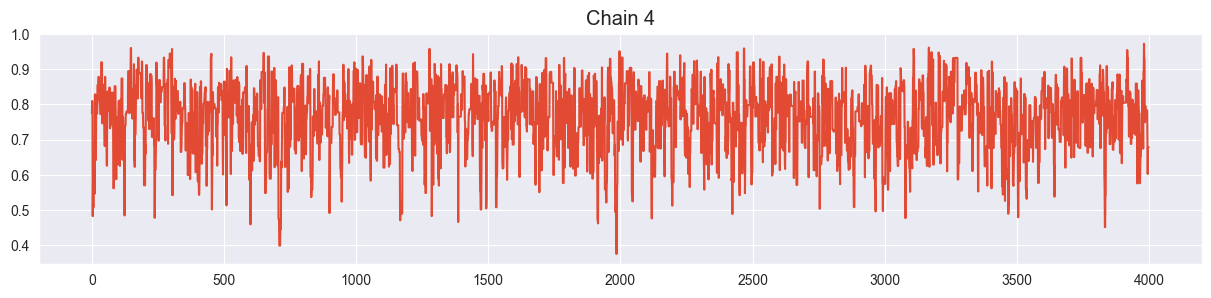

Visualize all four trace plots of your chains, use your best value for sigma from the previous exercise. Does everything look ok?

for i in range( sim.shape[1] ):

plt.figure()

plt.plot( sim[:,i] )

plt.title("Chain {}".format(i+1))

Looks ok!

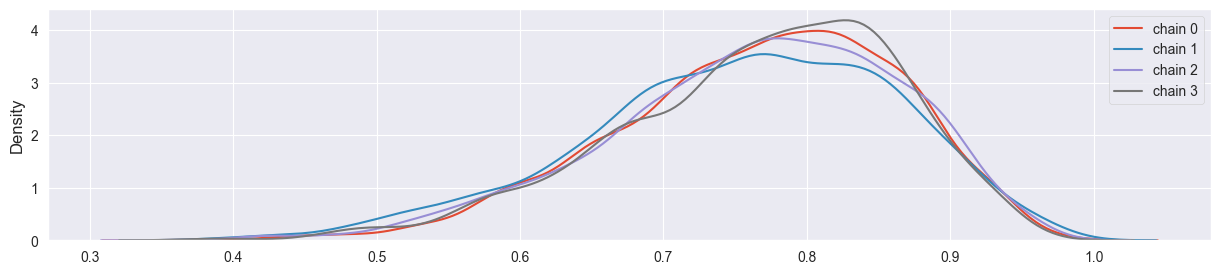

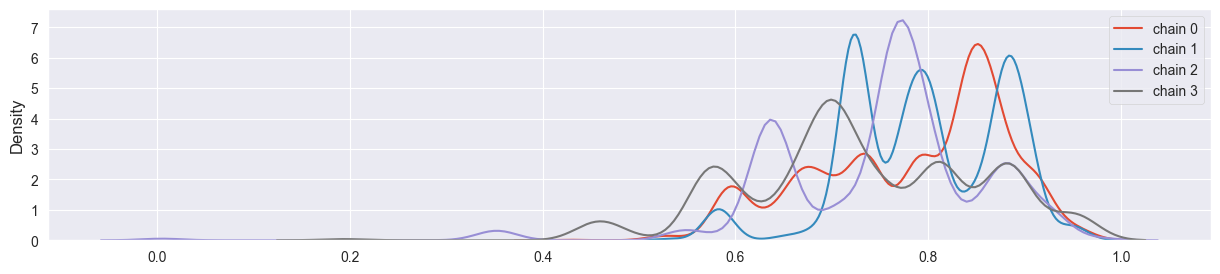

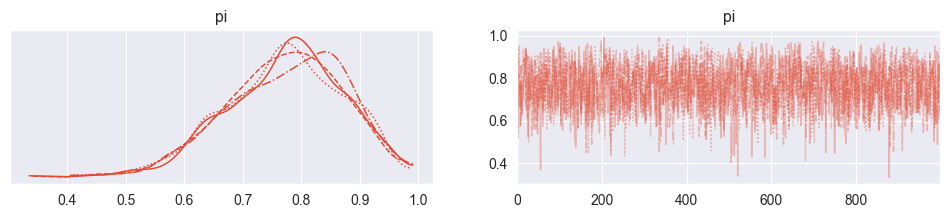

c) Density plots

Visualize all four chains in separate density plots (use e.g. seaborn.kde_plot()). Are they more or less in agreement? What happens with them if you set sigma too large or too small?

sigma just right:

def density_plots( sim ):

for i in range( sim.shape[1] ):

sns.kdeplot( sim[:,i], label="chain {}".format(i) )

plt.legend()

density_plots( sim )

Chains have converged quite well!

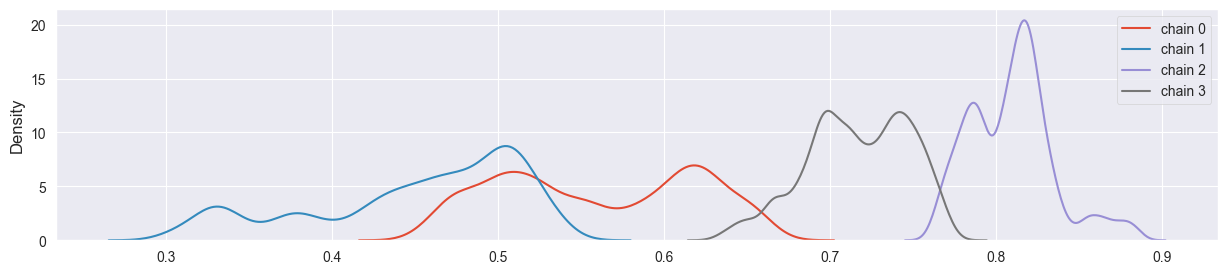

sigma too small:

sim = metropolis( 4, 4000, alpha, beta, n, k, sigma=0.002 )

density_plots( sim )

Chains have not converged yet and explore different regions of the parameter space.

sigma too large:

sim = metropolis( 4, 4000, alpha, beta, n, k, sigma=20 )

density_plots( sim )

Chains have not coverged - density plot is misleading, chains are stuck at single points.

d)

In the lecture we looked at the quantitative measure to indicate how well the four chains converged towards each other. Compute

for your output, where is the list of values in chain and is the concatenation of all .

What’s the value of for your optimal sigma? How does it change if you make sigma too small or too high? Is enough to distinguish good from bad chains?

def rhat( sim ):

total_var = np.var( sim.flatten() )

individual_var = np.var( sim, axis=0 )

rhat = np.sqrt( total_var / np.mean(individual_var) )

return rhat

sim = metropolis( 4, 4000, alpha, beta, n, k, sigma=0.2 )

rhat( sim )1.00017800332027Very close to 1, this is a sign for a healthy chain!

sigma too small:

sim = metropolis( 4, 4000, alpha, beta, n, k, sigma=0.002 )

rhat( sim )1.2210161637389108Rhat is much larger than 1 - this is a sign for a very unhealthy chain!

sigma too large:

sim = metropolis( 4, 4000, alpha, beta, n, k, sigma=20 )

rhat( sim )1.0087806933180763Rhat is quite small - however the chains have not converged well! Therefore Rhat alone cannot be used as a single diagnostic, evidence of the unhealthiness can only be found in the trace plot in this case (also an autocorrelation plot and effective sample size could be valuable tools here).

e) Burn-in phase

Is the burn-in phase typically required by the Metropolis algorithm relevant for your beta-binomial problem? Argue using your trace plots.

Let’s come back to our healthy chain:

sim = metropolis( 4, 4000, alpha, beta, n, k, sigma=0.2 )Trace plot of first chain (only beginning):

plt.plot( sim[:300,0] )

Since the beta-binomial posterior has a very smooth, one-dimensional probability surface, the Metropolis algorithm finds the mode quickly and appears to require only around 10 samples of burn-in time (depending on the initial value). In the overall size of the chain the burn-in phase can be neglected in this case. This is different for more complicated, multivariate problems!

Exercise 3: Doing the same with PyMC¶

a) MCMC with PyMC

Reproduce the results from the previous exercise with PyMC (see lecture notes) to demonstrate that PyMC does almost everything for you, except from specifying prior and likelihood of course.

import pymc as pm

with pm.Model() as beta_binom_model:

pi = pm.Beta( 'pi', alpha=alpha, beta=beta )

y = pm.Binomial( 'y', p=pi, n=n, observed=k )

trace = pm.sample(1000)PyMC gives you 4 chains by default and is using the NUTS algorithm in this case.

b) Investigate the output of pm.sample()

How can you access the samples for ?

The output of PyMC is an xarray object. Let’s have a look at it:

tracetrace.posteriorsim = trace.posterior.pi.values

sim.shape(4, 1000)This gets us back the values in the chain we produced previously.

c) Produce diagnostic artefacts and check them

Produce the following diagnostic artefacts and check whether they look alright (using the PyMC-internal functions or the arviz equivalents):

trace plot + density plot

autocorrelation plot

effective sample size (ESS)

Finally, if you have graphviz installed, use pm.model_to_graphviz() to produce a graphical representation of your very simple model. What is the meaning of the arrow?

Trace and density plot:

pm.plot_trace( trace )array([[<Axes: title={'center': 'pi'}>, <Axes: title={'center': 'pi'}>]],

dtype=object)

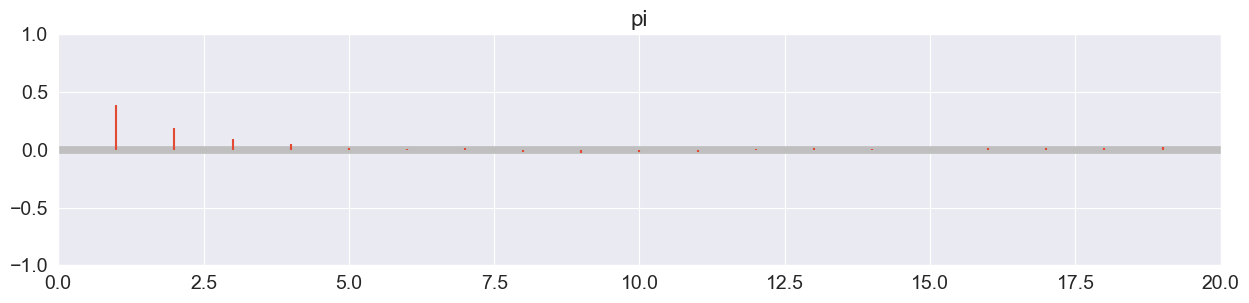

Autocorrelation plot (all chains combined):

pm.plot_autocorr( trace, combined=True, max_lag=20 );

Drops off extremely quickly!

Effective sample size (ESS):

ess = pm.ess( trace ).pi.values

essarray(1497.64862822)Considerably lower than 4000! ESS ratio:

ess / 40000.3744121570554813Much larger than the required 10%!

Rhat:

pm.rhat( trace ).pi.valuesarray(1.00282741)Looks good! (<< 1.05)

Model graph:

pm.model_to_graphviz( beta_binom_model )Arrow: the likelihood Bin depends on (that is modelled by a prior).

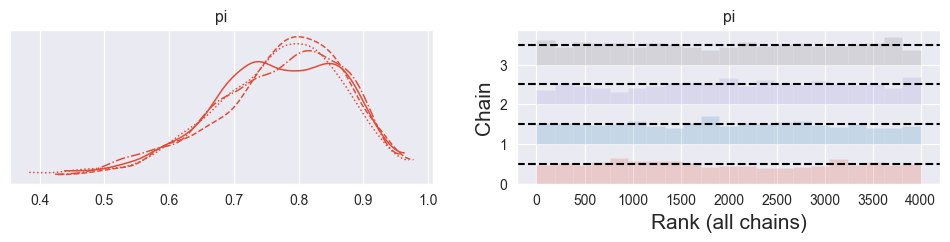

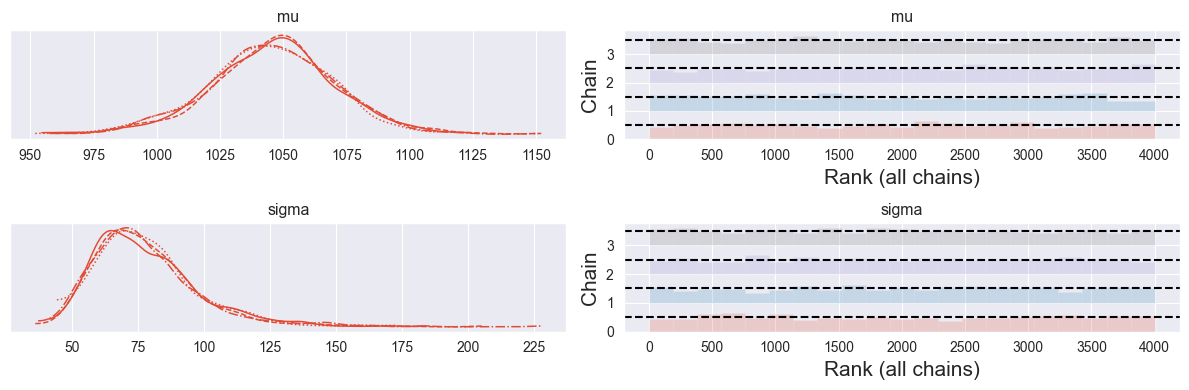

d) Investigate the rank plot

Some practitioners argue that a rank plot (or trank plot) is better suited to spot bad chains than a trace plot. Investigate what a rank plot is and find out how you can produce it with PyMC. Does it look good for your simulation?

pm.plot_trace( trace, kind="rank_bars" )array([[<Axes: title={'center': 'pi'}>,

<Axes: title={'center': 'pi'}, xlabel='Rank (all chains)', ylabel='Chain'>]],

dtype=object)

The rank (or trank) plot does the following:

Rank the samples from all chains from 1 to 4000 (number samples used here)

For each chain individually, produce a histogram of its ranks

For healthy, well-mixing chains, the histograms should be uniform. A spike indicates that the chain got stuck somwhere (producing too many samples of one particular value of . Any other asymmetries indicate that the chains did not explore the same regions of the parameter space.

The rank plot above looks good!

More details: Vehtari, Gelman, Simpson, Carpenter, Bürkner. 2019. Rank-normalization, folding, and localization: An improved R-hat for assessing convergence of MCMC. https://

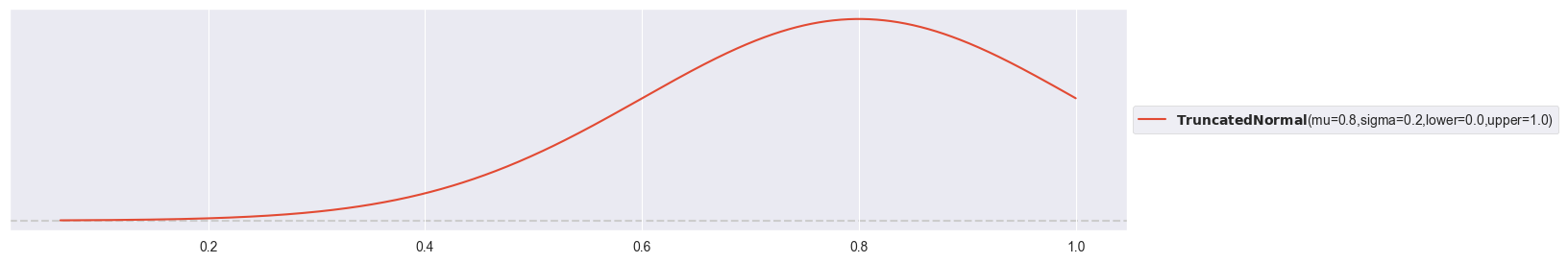

e) Compare truncated normal prior to beta prior

A coworker of yours argues that a truncated normal distribution with and , truncated between 0 and 1, would describe his prior belief better. Introduce it to your simulation and argue that it does not make a big difference which of these two priors you use. In particular:

do some research to find out what a truncated normal distribution is.

use preliz to visualize your beta prior and your coworker’s truncated normal prior and quickly compare them visually.

run a new simulation using your coworker’s prior and compare the density plots of both simulations.

use PyMC’s

summary()function to give your coworker a quick comparison. Does it matter a lot which of the two priors you use? What about bulk ESS and tail ESS? (what are they, what does it mean when they are different?)

Plot truncated normal prior:

pz.TruncatedNormal( mu=0.8, sigma=0.2, lower=0, upper=1 ).plot_pdf()<Axes: >

Plot previous prior:

pz.Beta( alpha=alpha, beta=beta ).plot_pdf()<Axes: >

Run simulation with truncated normal prior:

with pm.Model() as normal_binom_model:

pi = pm.TruncatedNormal( 'pi', mu=0.8, sigma=0.2, lower=0, upper=1 )

y = pm.Binomial( 'y', p=pi, n=n, observed=k )

trace2 = pm.sample(1000)Compare densities:

pm.plot_trace( trace2 )array([[<Axes: title={'center': 'pi'}>, <Axes: title={'center': 'pi'}>]],

dtype=object)

pm.plot_trace( trace )array([[<Axes: title={'center': 'pi'}>, <Axes: title={'center': 'pi'}>]],

dtype=object)

Compare summaries:

pm.summary( trace2 )pm.summary( trace )The resulting densities are very similar! It appears that the simulation with the truncated normal prior is numerically a bit less effective, with less effective samples both in the bulk (center of the distribution) and the tails (periphery). This means that the beta prior produces more accurate estimates at the same computational effort!

Exercise 4: Foundry¶

A company produces containers for foundries (‘Giessereien’) using ceramics and wants to know the maximum temperature such a container can tolerate and in particular also the uncertainty on it. Since finding out this maximum temperature destroys a container, as few measurements as possible should be made. Your team has started with 10 measurements and wants to know from you (as data scientist) to what accuracy you can make a statement about the average maximum temperature and its uncertainty.

The measurements are (in degrees Celsius):

and you consider the following model:

You have a prior expectation about (prior normally distributed) and (prior exponentially distributed) with C, C and C and assume a normal likelihood for your data. This is the first time that you see a model where we want to estimate multiple parameters instead of just one. Instead of defining one combined two-dimensional prior for and , we assume independence and define two individual priors. We will discuss this better in the lecture in week 5.

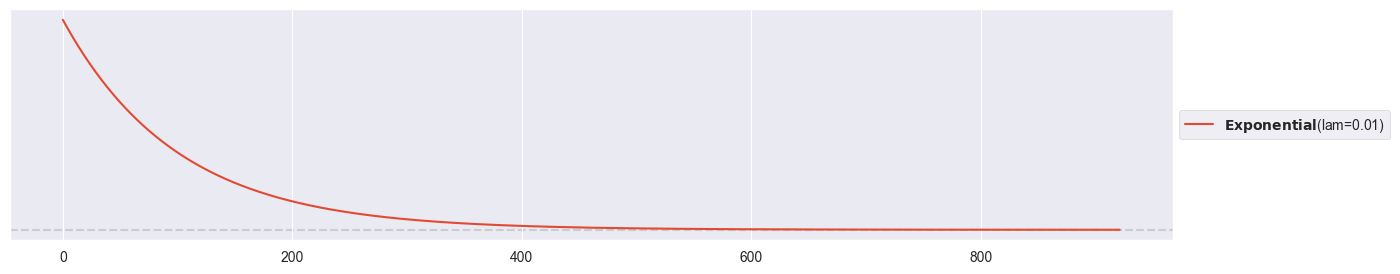

a) The Exponential Distribution

Read about the exponential distribution. What is the role of ? Does it favour lower or higher values than ? Visualize with preliz. Why would a normal distribution or a beta distribution not be a good prior for ?

pz.Exponential(1/100).plot_pdf()<Axes: >

pz.Exponential(1/100).mean()100.0If , then (see e.g. Wikipedia). Since the exponential distribution is right-skewed, prior values below C are favored to values above C.

A normal distribution can’t be used as prior for , since it could also produce negative values, and is strictly positive. A beta distribution is restricted to values between 0 and 1 and is most likely not. The exponential distribution suits well here, as it produces positive numbers.

b) Simulate with PyMC

Carefully formulate the simulation with PyMC and run it. Verify the convergence of your chain using a trank plot and compute posterior summaries with pm.summary(). What are your posterior expectations for and and how do they compare to your prior expectations?

observations = [1055, 1053, 1226, 967, 980, 1049, 1040, 1051, 1002, 1057]

with pm.Model() as normal_normal_model:

mu = pm.Normal( 'mu', mu=1000, sigma=100 )

sigma = pm.Exponential( 'sigma', 1/100 )

y = pm.Normal( 'y', mu=mu, sigma=sigma, observed=observations )

trace = pm.sample(1000)pm.plot_trace( trace, kind="rank_bars" )

plt.tight_layout()

Looks good!

pm.summary( trace )Your understanding of mean maximum temperature increased from to , while your understanding of uncertainty on this mean maximum temperature decreased from C to C.

c) Visualize your Model

If you have graphviz installed: Use pm.model_to_graphviz() to visualize your model. Can you relate it to the model description above?

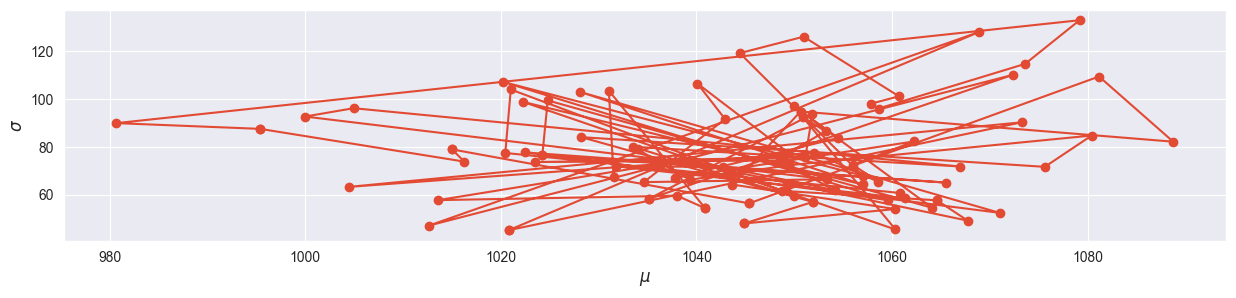

pm.model_to_graphviz( normal_normal_model )d) Visualize Parameters

Extract the first 100 samples for and from the first chain and visualize them against each other in a two-dimensional plot that shows the evolution of your chain. Can you see evidence for a warm-up phase? Note: Advanced MCMC algorithms do not have a burn-in phase (finding the mass of the posterior), they have a warm-up phase (automatically adjusting the hyperparameters).

Extract Numpy arrays for mu and sigma:

mu_chain = trace.posterior.mu.values

sigma_chain = trace.posterior.sigma.values

mu_chain.shape, sigma_chain.shape((4, 1000), (4, 1000))2D plot of parameter space:

plt.plot( mu_chain[0,:100], sigma_chain[0,:100], marker="o" )

plt.xlabel("$\mu$")

plt.ylabel("$\sigma$")

The warm-up phase can clearly be located away from the mode!